From Architecture Vision to a Production-Ready Implementation Template

Introduction

As Power BI adoption grows across business domains, security quickly becomes one of the hardest problems to scale. Many organizations start with good intentions but end up with duplicated datasets, inconsistent access rules, manual fixes, and performance bottlenecks—especially when hierarchical access is involved.

This post presents a reference architecture and implementation template for Entity-based Row-Level Security (RLS) in Power BI. It combines conceptual design, governance principles, and a hands-on implementation blueprint that teams can reuse across domains such as revenue, cost, and risk.

The goal is simple:

👉 One conformed security dimension, one semantic model, many audiences—securely and at scale.

Executive Summary

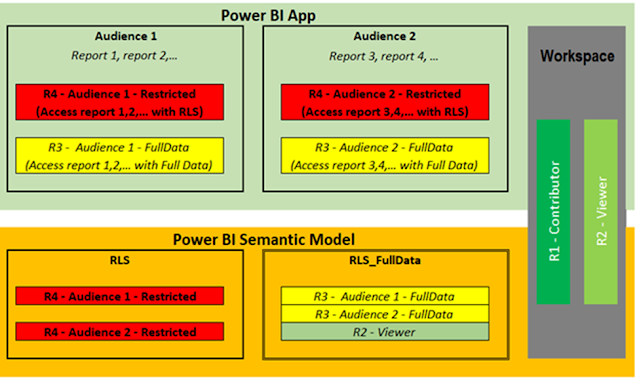

The proposed solution standardizes on Entity as the single, conformed security dimension (SCD Type 1) driving dynamic RLS across Power BI datasets.

User access is centrally managed in the Business entity interface application, synchronized through ETL, and enforced in Power BI using native RLS. Where higher-level access is required, Department-based static roles complement the dynamic model. A FullData role supports approved no-security use cases—without duplicating datasets.

The result is a secure, scalable, auditable, and performance-friendly Power BI security framework that is production-ready.

Why Entity-Based Security?

Traditional Power BI security implementations often suffer from:

- Dataset duplication for different audiences
- Hard-coded filters and brittle DAX
- Poor performance with deep hierarchies
- Limited auditability and governance

An Entity-based security model solves these problems by:

- Introducing a single conformed dimension reused across all facts
- Separating entitlements from data modeling
- Supporting both granular and coarse-grained access
- Scaling naturally as new domains and fact tables are added

Entity becomes the language of access control across analytics.

End-to-End Security Flow

At a high level, the solution works as follows:

- Business entity interface application maintains user ↔ Entity entitlements
- ETL processes refresh:
  - Dim_Entity (SCD1)
  - Entity_Hier (hierarchy bridge)
  - User_Permission (effective access)
- Power BI binds the signed-in user via USERPRINCIPALNAME()
- Dynamic RLS filters data by Entity and all descendants
- Department-based static roles provide coarse-grained access
- RLS_FullData supports approved no-security audiences

All of this is achieved within a single semantic model.

Scope and Objectives

This framework applies to:

- Power BI datasets and shared semantic models
- Fact tables such as:
  - Fact_Revenue
  - Fact_Cost
  - Fact_Risk
  - Fact_[Domain] (extensible)
- Entity-based and Department-based security
- Centralized entitlement management and governance

Out of scope:

- Database-level RLS
- Report-level or app-level access configuration

The focus is dataset-level security governance.

Canonical Data Model

Core Tables

Dimensions & Security

- Dim_Entity – conformed Entity dimension (SCD1)
- Entity_Hier – parent-child hierarchy bridge
- User_Permission – user ↔ Entity entitlement mapping
- Dim_User (optional) – identity normalization

Facts

- Fact_Revenue
- Fact_Cost
- Fact_Risk
- Fact_[Domain]

Dim_Entity (SCD Type 1)

- Current-state only
- One row per Entity
- No historical tracking

Entity_Hier (Bridge)

- Pre-computed in ETL
- Includes self-to-self rows
- Optimized for hierarchical security expansion
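To make the bridge concrete, here is a minimal Python sketch of how an ETL step might expand parent-child pairs into bridge rows. The function name `build_entity_hier`, the `(ancestor, descendant, depth)` row shape, and the sample Entity keys are assumptions for illustration only; the actual ETL tooling and column names are not specified in this post.

```python
def build_entity_hier(parent_of):
    """Expand child -> parent pairs into (ancestor, descendant, depth) rows.

    Every entity gets a self-to-self row at depth 0, followed by one row
    per ancestor found by walking up the parent chain.
    """
    rows = []
    for entity in parent_of:
        current, depth, seen = entity, 0, set()
        while current is not None:
            if current in seen:
                # A cycle would make the walk endless, so fail loudly.
                raise ValueError(f"cycle detected at entity {current!r}")
            seen.add(current)
            rows.append((current, entity, depth))
            current = parent_of.get(current)
            depth += 1
    return sorted(rows)


# Hypothetical hierarchy: GROUP is the root, EMEA sits under it,
# and UK and DE sit under EMEA.
parent_of = {"GROUP": None, "EMEA": "GROUP", "UK": "EMEA", "DE": "EMEA"}
entity_hier = build_entity_hier(parent_of)

for ancestor, descendant, depth in entity_hier:
    print(ancestor, descendant, depth)
```

Because the expansion happens once in ETL, the security filter only needs a flat lookup on the ancestor column rather than a recursive walk at query time.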

User_Permission

- Source of truth: Business entity interface application
- No calculated columns
- Fully auditable

Fact Tables (Standard Pattern)

Example: Fact_Revenue

Rule: Every fact table must carry a resolvable Entity_Key.

Relationship Design (Strict by Design)

- Single-direction relationships only
- No bi-directional filters by default
- Security propagation handled exclusively by RLS

This avoids accidental over-filtering and performance regressions.

Row-Level Security Design

1. Dynamic Entity-Based Role

Role name: RLS_Entity_Dynamic

Grants

- Explicit Entity access
- All descendant Entities automatically
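The logic of the dynamic role can be sketched outside Power BI. The Python below is a simulation, not the role's DAX: it assumes the bridge is a list of `(ancestor, descendant)` pairs with self-to-self rows, that `User_Permission` is a list of `(user principal name, Entity key)` pairs, and that user names are compared case-insensitively. The function names, sample users, and amounts are all hypothetical.

```python
def visible_entities(upn, user_permission, entity_hier):
    """Return the Entity keys a user may see: explicit grants plus descendants."""
    granted = {entity for user, entity in user_permission
               if user.lower() == upn.lower()}
    # Self-to-self bridge rows make each granted Entity itself visible.
    return {desc for anc, desc in entity_hier if anc in granted}


def apply_rls(upn, fact_rows, user_permission, entity_hier):
    """Keep only the fact rows whose Entity_Key is visible to the user."""
    allowed = visible_entities(upn, user_permission, entity_hier)
    return [row for row in fact_rows if row["Entity_Key"] in allowed]


# Hypothetical bridge: GROUP > {EMEA > {UK, DE}, US}
entity_hier = [
    ("GROUP", "GROUP"), ("GROUP", "EMEA"), ("GROUP", "UK"),
    ("GROUP", "DE"), ("GROUP", "US"),
    ("EMEA", "EMEA"), ("EMEA", "UK"), ("EMEA", "DE"),
    ("UK", "UK"), ("DE", "DE"), ("US", "US"),
]
user_permission = [
    ("alice@example.com", "EMEA"),   # one grant on a parent Entity
    ("bob@example.com", "UK"),       # two grants, combined as a union
    ("bob@example.com", "US"),
]
fact_revenue = [
    {"Entity_Key": "EMEA", "Amount": 50},
    {"Entity_Key": "UK", "Amount": 30},
    {"Entity_Key": "DE", "Amount": 20},
    {"Entity_Key": "US", "Amount": 75},
]

for user in ("alice@example.com", "bob@example.com", "carol@example.com"):
    rows = apply_rls(user, fact_revenue, user_permission, entity_hier)
    print(user, sum(row["Amount"] for row in rows))
```

In this sample, the parent grant reaches its children, the two grants combine as a union, and the user with no entitlement gets no rows.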

2. Department-Based Static Role

Role name: RLS_Department_Static

Used for:

- Executive access
- Oversight and aggregated reporting

3. No-Security Role

Role name: RLS_FullData

- Applied only to approved security groups
- Uses disconnected security dimensions
- No dataset duplication
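Power BI treats membership in several roles as additive: a user sees every row that at least one of their roles allows. The Python sketch below simulates that behaviour for the static and full-data roles. It assumes each role can be modelled as a simple row predicate, that rows carry a hypothetical `Department` field, and that "Finance" is an example department; none of these details come from the actual model.

```python
def rows_for_user(user_roles, role_filters, rows):
    """Return the rows that pass at least one of the user's role filters."""
    filters = [role_filters[name] for name in user_roles]
    # any() over an empty list is False, so a user with no role gets no rows.
    return [row for row in rows if any(f(row) for f in filters)]


# Hypothetical predicates standing in for the role filter expressions.
role_filters = {
    "RLS_Department_Static": lambda row: row["Department"] == "Finance",
    "RLS_FullData": lambda row: True,  # no restriction at all
}

rows = [
    {"Department": "Finance", "Amount": 10},
    {"Department": "Sales", "Amount": 20},
]

print(rows_for_user(["RLS_Department_Static"], role_filters, rows))
print(rows_for_user(["RLS_Department_Static", "RLS_FullData"], role_filters, rows))
print(rows_for_user([], role_filters, rows))
```

The additive behaviour is the reason to keep RLS_FullData membership tightly controlled: adding it to a user overrides any narrower role they also hold.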

ETL Responsibilities and Governance

ETL is responsible for:

- Maintaining Dim_Entity as SCD1
- Regenerating Entity_Hier on Entity changes
- Synchronizing User_Permission entitlements
- Capturing freshness timestamps

Mandatory data quality checks

- Orphan Entity keys
- Missing Entity mapping in facts
- Stale entitlement data
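These three checks could be expressed as small functions in an ETL validation step. The sketch below is one possible shape in Python. It assumes "orphan" means a key referenced by a permission or bridge row that is missing from Dim_Entity, and it uses a hypothetical 24-hour freshness threshold; the function names and sample data are illustrative only.

```python
from datetime import datetime, timedelta, timezone


def orphan_entity_keys(referenced_keys, dim_entity_keys):
    """Keys referenced elsewhere that do not exist in Dim_Entity."""
    return sorted(set(referenced_keys) - set(dim_entity_keys))


def facts_missing_entity(fact_rows, dim_entity_keys):
    """Fact rows whose Entity_Key is absent or not found in Dim_Entity."""
    known = set(dim_entity_keys)
    return [row for row in fact_rows if row.get("Entity_Key") not in known]


def is_stale(last_synced, now, max_age=timedelta(hours=24)):
    """True when the entitlement sync is older than the allowed age."""
    return now - last_synced > max_age


# Hypothetical sample data.
dim_entity_keys = ["GROUP", "EMEA", "UK"]
permission_keys = ["EMEA", "UK", "APAC"]          # APAC is not in Dim_Entity
fact_rows = [
    {"Entity_Key": "UK", "Amount": 30},
    {"Entity_Key": None, "Amount": 5},            # no mapping at all
    {"Entity_Key": "LEGACY-7", "Amount": 8},      # unknown key
]
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
last_synced = datetime(2024, 1, 1, 6, 0, tzinfo=timezone.utc)

print(orphan_entity_keys(permission_keys, dim_entity_keys))
print(len(facts_missing_entity(fact_rows, dim_entity_keys)))
print(is_stale(last_synced, now))
```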

Governance dashboards in Power BI surface:

- Users without access
- Orphaned permissions
- Entity coverage by fact table

Handling Non-Conforming Data

Not every dataset conforms to the model. This framework handles the gaps by:

- Cataloguing fact tables lacking Entity keys
- Introducing mapping/bridge tables via ETL
- Excluding unresolved rows from secured views
- Enforcing coverage targets (e.g., ≥ 99.5%)
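As a rough illustration of the last two points, the Python sketch below separates resolved from unresolved fact rows and compares the resulting coverage with the 99.5% target. The function names, the choice to treat an empty table as fully covered, and the sample rows are assumptions made for this example.

```python
COVERAGE_TARGET = 0.995  # the ">= 99.5%" target mentioned above


def split_by_resolution(fact_rows, dim_entity_keys):
    """Separate rows with a known Entity_Key from rows that cannot be resolved."""
    known = set(dim_entity_keys)
    resolved = [r for r in fact_rows if r.get("Entity_Key") in known]
    unresolved = [r for r in fact_rows if r.get("Entity_Key") not in known]
    return resolved, unresolved


def coverage(resolved, unresolved):
    """Share of rows that resolve to an Entity (1.0 for an empty table)."""
    total = len(resolved) + len(unresolved)
    return 1.0 if total == 0 else len(resolved) / total


# Hypothetical fact table: 996 resolvable rows and 4 legacy rows.
dim_entity_keys = ["UK", "DE"]
fact_rows = (
    [{"Entity_Key": "UK", "Amount": 1}] * 996
    + [{"Entity_Key": "LEGACY", "Amount": 1}] * 4
)

resolved, unresolved = split_by_resolution(fact_rows, dim_entity_keys)
ratio = coverage(resolved, unresolved)
print(f"coverage={ratio:.3%}, meets target: {ratio >= COVERAGE_TARGET}")
```

Only the `resolved` rows would feed the secured view; the `unresolved` rows stay available for the catalogue of non-conforming data until a mapping exists.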

Security integrity is preserved without blocking delivery.

Deployment & Rollout Checklist

Dataset

- RLS roles created and tested
- Relationships validated
- No calculated security tables

Security

- All Viewer/App users assigned to a role
- Dataset owners restricted
- FullData role explicitly approved

Testing

- Parent Entity sees children
- Multiple Entity grants = union
- No entitlement = no data
- Deep hierarchy performance validated

Benefits Realized

This combined architecture and implementation delivers:

- One conformed security dimension
- One semantic model for all audiences
- Strong auditability and governance
- Predictable performance at scale
- A future-proof template for new domains

Security moves from a tactical concern to a strategic platform capability.

Closing Thoughts

Entity-based Row-Level Security is not just a Power BI technique—it is a modeling discipline. By separating entitlements from facts, pre-computing hierarchies, and enforcing consistent patterns, organizations can scale analytics securely without sacrificing agility or performance.

This reference architecture and implementation template is ready for rollout, ready for reuse, and ready for governance.