1. Problems

When loading large data volume from SQL Server to Power BI desktop, we could experience three problems below:

1) The refresh process takes a very long time; it could be hours, even days. In addition, the local PC has to be powerful enough with a good CPU and extensive memory. Otherwise, we could experience PC crashes.

2) As the pbix file is huge when refreshed, it will also take a long time to publish pbix file to the Power BI service. In addition, it will depend on the network speed between our local PC and Azure cloud.

3) As Power BI developers, we could become even more frustrated, as the Power Query could become extremely slow to transform the data.

Overall, we couldn’t even work with such a large data volume at all.

2. Analysis and solutions

This is a big topic of reducing the size of the Power BI dataset, such as using incremental refresh, eliminating unused columns, aggregating data at a high level, and removing auto time intelligence. These solutions are reasonable to implement; however, what to do if we can’t implement any of these solutions due to business requirements. This document will provide a practical solution to resolve this issue. While you can use the link to check answers from remote files, this document handles data from the SQL server.

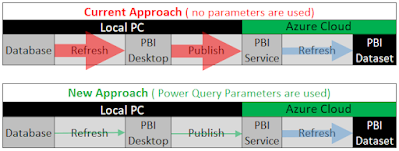

The idea is to let Azure cloud refresh the Power BI dataset while using a small dataset to do Power BI transformation and modelling. The diagram below illustrates the objective

Our goal is to get PBI Dataset ready to support Power BI report authors using the shared dataset concept.

The key is to use Power Query parameters to limit the Power BI desktop data. The table below shows steps in detail

3. Implementation Approach 1 – Dynamic SQL statement

This solution below is compelling, as we can generate any SQL as needed. The syntax in Advanced Power Query editor to generate SQL is similar to Excel functions. In other words, we can quickly create any SQL statement, regardless of whether SQL is complicated or not, with Power Query parameters.

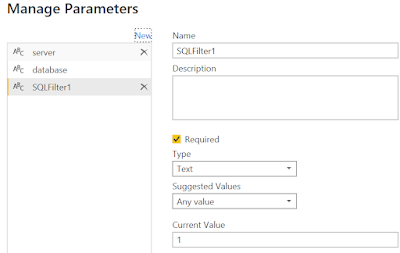

1) Define 3 parameters in Power Query. What is needed is the parameter SQLFilter1 only, but it is best to practice having both database and server as parameters to make the dataset easy to promote.

Define SQLFilter1 as text to be easy to put it to SQL query. (However, you can define any type as needed, but you may need to convert it into text in the SQL statement.)

2) Define a native query, and Make query with “WHERE” or “AND”, such as

SELECT

Branch_ID

.............

FROM TableX

Where

((0 = 1) OR (Division_ID

= 1))

Or

SELECT

Branch_ID

.............

FROM TableX

Where

((0 = 0) OR (Division_ID

= 0))

(Actually, you can write any SQL conditions as needed)

Run it with SQL management studio to ensure SQL is working and retrieve all data when the parameter is 0.

3) Open Power Query advanced editor, and put the parameter into SQL query as below

((0 = "& SQLFilter1 &") OR (Division_ID = "& SQLFilter1 &"))

4) You may get a continuous warning message to approve a native query security question. Go to options to disable require user approval for new native database queries.

5) Test this parameter to get the number of records such as

Number Of Records = COUNTROWS('Table1')

6) Choose parameter(s) and publish this report ( or shared dataset) to PBI service

7) Change parameters from whatever value to 0, which should load all data. You can only do it if you are the user for the data source with server and database specified.

8) Connect reports with this published dataset

4 Implementation Approach 2 - Filter data based on Power Query

The solution above is extremely flexible, however, it doesn’t work against Power BI deployment pipelines with data source rules. Power BI engine behind the scene doesn’t know how to parser dynamic SQL with Power BI deployment pipelines.

Another solution could handle the same concept by using use Power Query.

= Table.SelectRows(Source, each ( (PQFilter1<>null and [Branch_ID] = PQFilter1) or (PQFilter1=null)) )

5 Implementation Approach 3 – SQL query switch

let

Source = if ParameterIsDesktop = "YES" then Sql.Database……

else Sql.Database….)

in

Source

6 Limitations with deployment pipelines

If we have decided to use the Power BI deployment pipeline to deploy Power BI dataset, we have figured out two limitations with Power BI development below:

1) It doesn't support dynamic SQL. However, we can use the Power Query filter to resolve this issue, described in the last section

2) It doesn't support parameters with the data source

When promoting a dataset, the most helpful feature is to define database connection and parameters rule. The detailed steps are below

step 1: Deploy the dataset to TEST; you need to deploy to enable you to change the rule.

Step 2: Change the connection rule for the dataset. At this moment, the rule is not changed yet.

Step 3: Redeploy the dataset again

step 4: Now you can go gateway to enable connection to TEST database

No comments:

Post a Comment